Attack of the zombie bot?

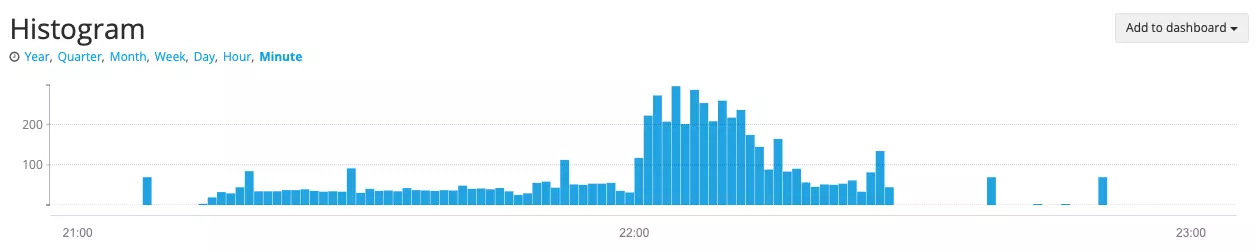

We were surprised to see a massive number of requests from the Seekport bot on our systems.

Those who have been around for a while will remember: Seekport was a German search engine that launched in the mid-2000s as an alternative to Google. Unfortunately, it didn't make it: insolvency proceedings were opened in 2009. A zombie bot?

We noticed the bot because it ignored the rel="noindex" markup in our HTML and started downloading files in bulk. A well-behaved bot honors that markup and does not do this.

A quick search revealed that the Seekport domain has been bought by Sistrix. Apparently, Sistrix either operates a new search engine under the Seekport name or uses the "known" bot name for its own crawlers.

In this specific case, because the bot did not honor the markup, it downloaded so many binary files that we had to act: we locked it out. In addition to an entry in robots.txt, we also blocked the bot at server level, directly in Varnish.

This was very easy with the following entry in the Varnish configuration:

    sub vcl_recv {
        ......
        # block a specific user agent,
        # e.g. Seekport, which does not follow
        # the rel="noindex" tag
        if (req.http.User-Agent ~ "Seekport") {
            return (synth(403, "Forbidden."));
        }
        .....
    }

We then added this entry to our Puppet configuration and created a tag, and the change could be rolled out to the affected service right away. You can verify that the bot is actually locked out using cURL:

    curl -I --user-agent "Mozilla/5.0 (compatible; Seekport Crawler; seekport.com/)" \
        www.domain.name

The result is:

    HTTP/1.1 403 Forbidden.
    date: Wed, 14 Jul 2021 06:58:02 GMT
    server: Varnish
    x-varnish: 1421139
    content-type: text/html; charset=utf-8
    content-length: 782

At the moment we are still deciding whether to block the bot in all of our services. If we find out more about the bot and it starts to honor the markup in the HTML, we will reconsider.
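The manual cURL check shown earlier could also be scripted, for example to run after each rollout. The following is only a minimal sketch: the function names `fetch_status` and `is_blocked` are made up for illustration, and `www.domain.name` is the same placeholder host used in the article.

```shell
#!/bin/sh
# Sketch only: fetch_status and is_blocked are hypothetical helper names.

# Print just the HTTP status code returned for a HEAD request
# that is sent with the given User-Agent string.
fetch_status() {
    url=$1
    agent=$2
    curl --silent --head --output /dev/null --write-out '%{http_code}' \
         --user-agent "$agent" "$url"
}

# Succeed (exit status 0) only if the status code is the 403
# that the Varnish rule is expected to return.
is_blocked() {
    [ "$1" = "403" ]
}

# Example usage (performs a real request, so it is left commented out):
# status=$(fetch_status "www.domain.name" \
#     "Mozilla/5.0 (compatible; Seekport Crawler; seekport.com/)")
# if is_blocked "$status"; then
#     echo "blocked"
# else
#     echo "NOT blocked, status: $status"
# fi
```

It is probably worth running the same check with an ordinary browser User-Agent as well, to confirm that the rule does not lock out regular visitors.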

Perhaps the people at Sistrix can share some more information in a comment?

